DRIVER ASSISTANT SYSTEM

MAJOR PROJECT - 2024

Driver Assistance System

Road safety is a long-standing public concern, since road traffic accidents are one of the main causes of injury and death across the world. Active engagement with the development of modern transportation systems, road safety measures, and other collaborations could help reduce the number of traffic accidents. The proposed work seeks to contribute to the initiatives that broaden Advanced Driver Assistance Systems (ADASs) and Autonomous Vehicles (AVs). It comprises six components: a drowsiness detection and alert system, lane detection, a lane departure warning system, a lane keeping assistance system, object detection and recognition, and a collision warning system. The drowsiness detection and alert system is engaged throughout the ride to monitor the driver’s focus levels. Lane detection is carried out using an ultrafast lane detector, which delivers spatial awareness by recognizing road lane markings. The lane departure warning system activates whenever the vehicle deviates from the lane without signaling, alerting the driver immediately. If the vehicle continues to deviate from the lane, the lane keeping assistance system detects this and adjusts the vehicle’s steering to keep it in the lane. Concurrently, object detection and recognition using YOLO identifies a wide range of objects on the road, which is crucial input for the collision warning system. The collision warning system employs OpenCV and distance measurement to monitor the vehicle’s surroundings and alert the driver to possible collision risks. The integration aims to enhance driver safety by providing timely warnings and offering assistance throughout the driving process.
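The collision warning step combines a detected bounding box with a distance estimate. The sketch below is one common way to do this (a pinhole-camera approximation), not necessarily the method used in this repository. The object widths, the focal length and the warning thresholds are all hypothetical values chosen for illustration.

```python
# Assumed real-world widths in metres (hypothetical, for illustration only).
KNOWN_WIDTH_M = {"car": 1.8, "truck": 2.5, "person": 0.5}


def estimate_distance_m(label, box_width_px, focal_length_px):
    """Pinhole approximation: distance = real_width * focal_length / pixel_width."""
    real_width = KNOWN_WIDTH_M.get(label)
    if real_width is None or box_width_px <= 0:
        return None  # unknown class or degenerate box: no estimate
    return real_width * focal_length_px / box_width_px


def collision_level(distance_m, warn_m=20.0, danger_m=8.0):
    """Map a distance to 'safe', 'warning' or 'danger' (thresholds are assumptions)."""
    if distance_m is None:
        return "safe"
    if distance_m < danger_m:
        return "danger"
    if distance_m < warn_m:
        return "warning"
    return "safe"


# A car whose box is 180 px wide, seen by a camera with a 1000 px focal length.
distance = estimate_distance_m("car", box_width_px=180, focal_length_px=1000)
print(distance, collision_level(distance))
```

The focal length in pixels would normally come from a camera calibration, and the estimate degrades when the object is seen at an angle or is partly occluded.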

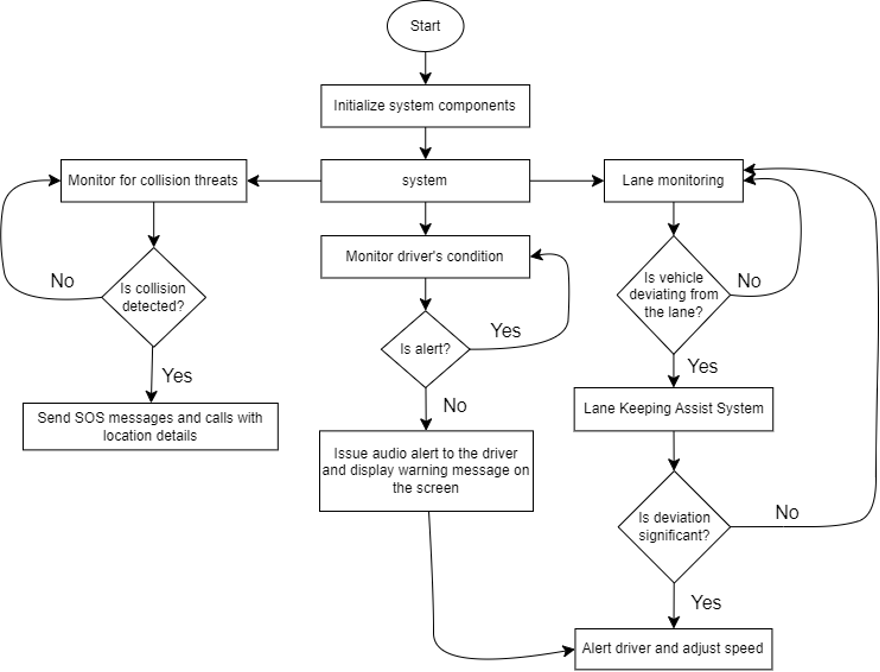

Proposed system

The proposed system has two points of view:

- POV_1 -> Monitoring the external environment

- POV_2 -> Monitoring the internal environment

POV_1 : Monitoring the external environment

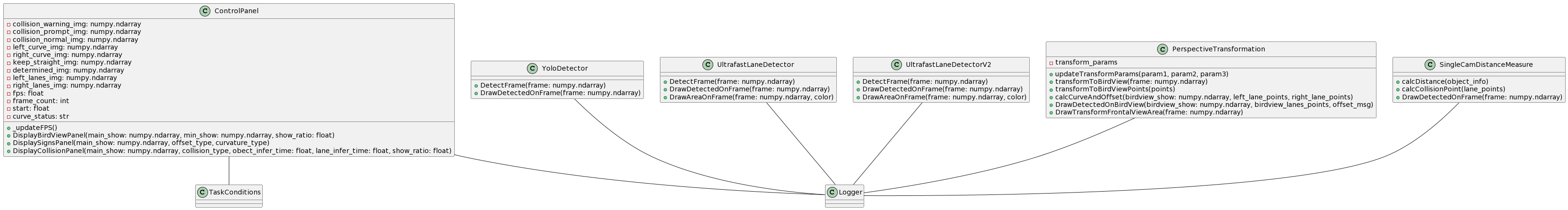

Class UML diagram

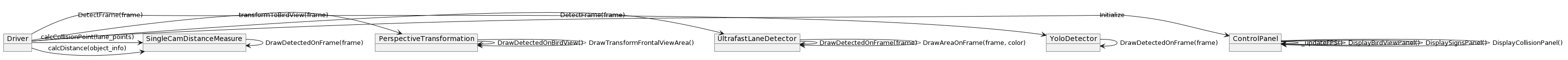

Collaboration UML diagram

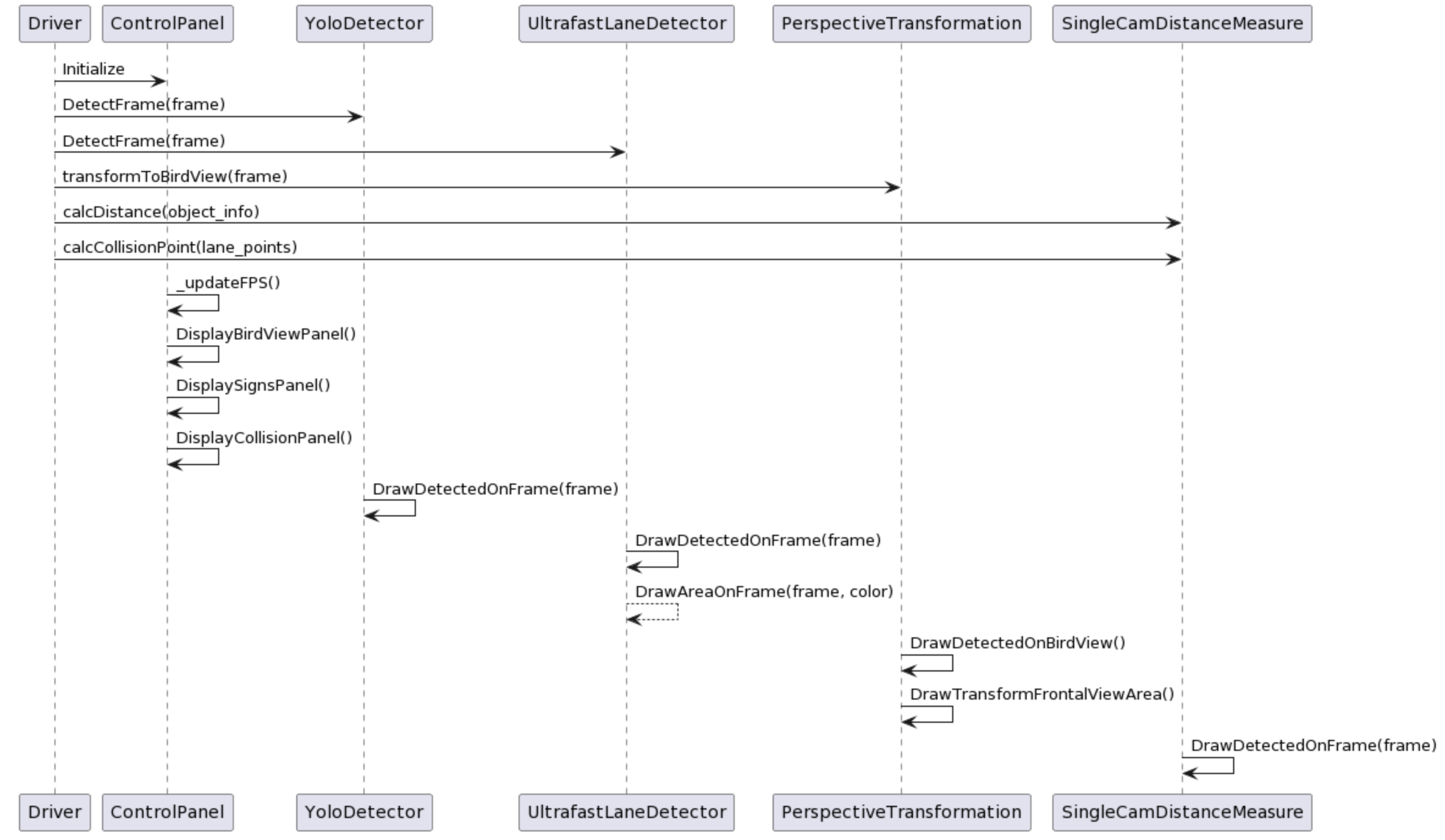

Sequence UML diagram

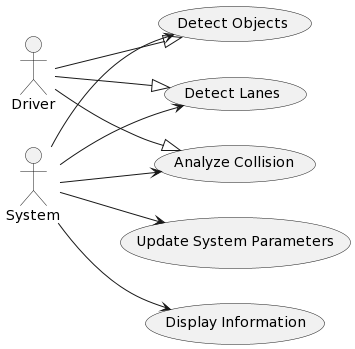

Use case UML diagram

POV_2 : Monitoring the internal environment

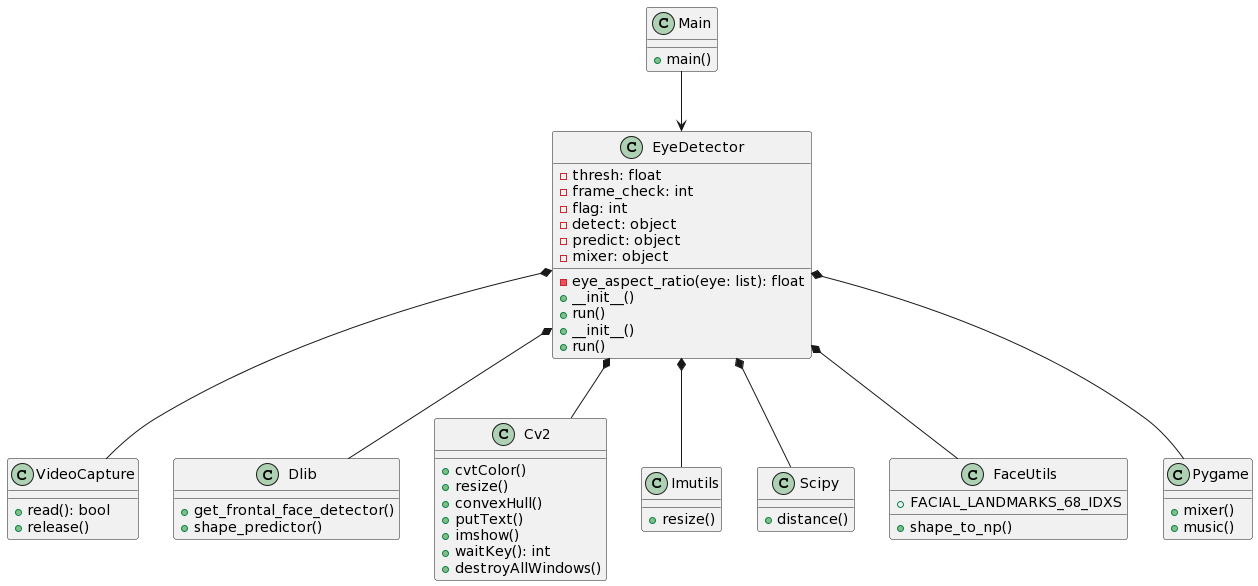

UML diagram

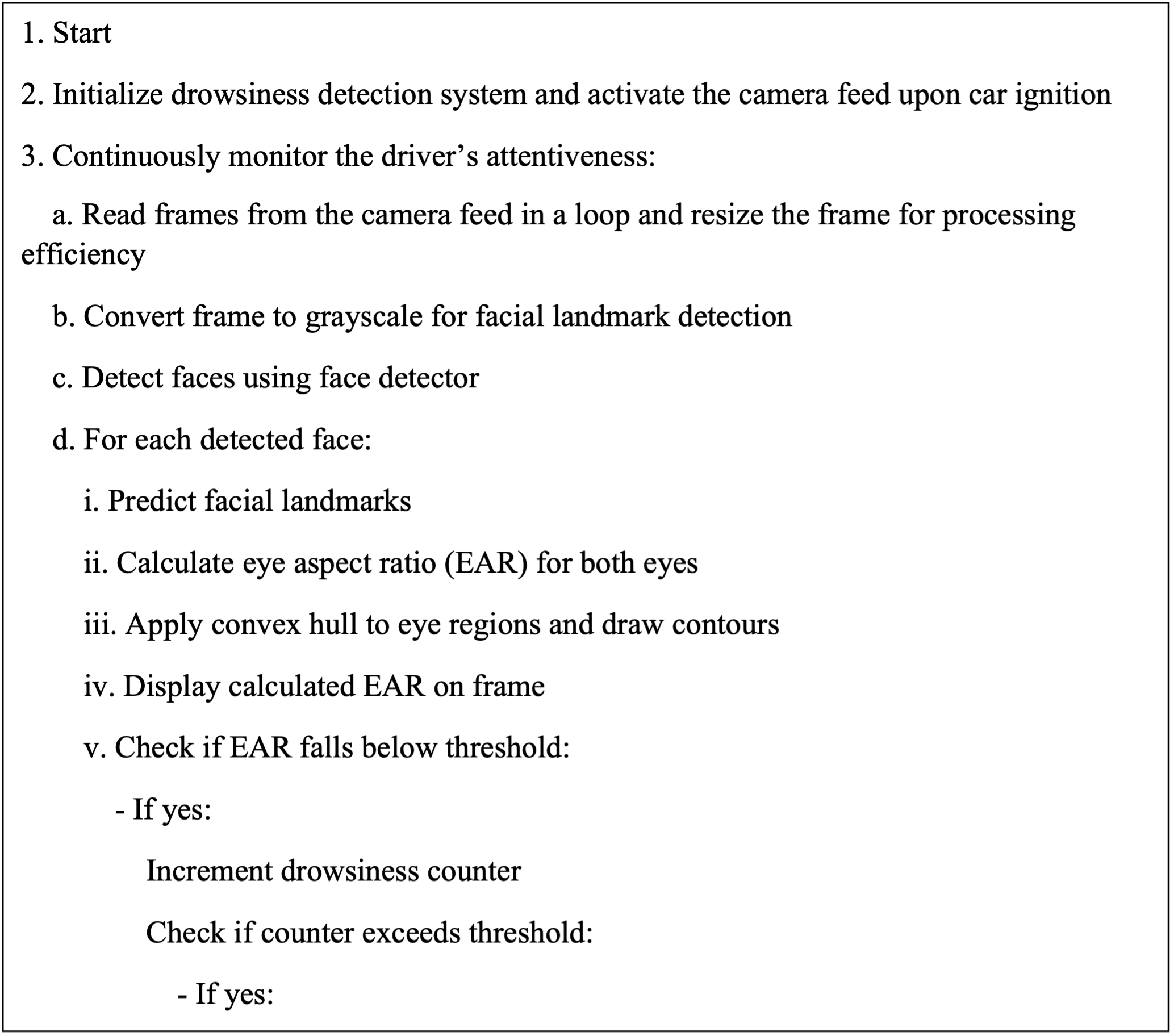

Pseudo code

Code setup :

Requirements :

Python 3.7+

OpenCV, scikit-learn, onnxruntime, PyCUDA and PyTorch.

Install :

The `requirements.txt` file should list all Python libraries that the code depends on. Install them using:

`pip install -r requirements.txt`

Examples :

Download YOLO Series ONNX model :

Use the Google Colab notebook to convert the models.

| Model | Release version |
| --- | --- |
| YOLOv5 | v6.2 |
| YOLOv6/Lite | 0.4.0 |
| YOLOv7 | v0.1 |
| YOLOv8 | 8.1.27 |
| YOLOv9 | v0.1 |

Convert ONNX to TensorRT model :

Modify `onnx_model_path` and `trt_model_path` before converting.

`python convertOnnxToTensorRT.py -i <path-of-your-onnx-model> -o <path-of-your-trt-model>`

Quantize ONNX models :

Converting a model to use float16 instead of float32 can decrease the model size.
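As a rough illustration of why float16 reduces size, the sketch below packs a handful of made-up weights in both precisions using only the standard library. It is not what `onnxQuantization.py` does internally. It only shows the storage halving and the rounding error that the conversion introduces.

```python
import struct

# Hypothetical weights standing in for a model's float32 parameters.
weights = [0.1, -1.5, 3.14159, 1024.0, 1e-3]

fp32 = struct.pack(f"<{len(weights)}f", *weights)  # 4 bytes per value
fp16 = struct.pack(f"<{len(weights)}e", *weights)  # 2 bytes per value

# Read the float16 values back to see how much precision was lost.
restored = struct.unpack(f"<{len(weights)}e", fp16)
max_rel_error = max(abs(r - w) / abs(w) for r, w in zip(restored, weights))

print(f"float32: {len(fp32)} bytes, float16: {len(fp16)} bytes")
print(f"largest relative rounding error: {max_rel_error:.2e}")
```

The size is halved, at the cost of a small rounding error per weight. Values outside the float16 range (above roughly 65504 in magnitude) cannot be represented, so accuracy should be re-checked after conversion.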

`python onnxQuantization.py -i <path-of-your-onnx-model>`

Video Inference :

- Setting Config :

Note : both ONNX and TensorRT model formats are supported, but the model file must match the configured model type.

    lane_config = {
        "model_path": "./TrafficLaneDetector/models/culane_res18.trt",
        "model_type" : LaneModelType.UFLDV2_CULANE
    }

    object_config = {
        "model_path": './ObjectDetector/models/yolov8l-coco.trt',
        "model_type" : ObjectModelType.YOLOV8,
        "classes_path" : './ObjectDetector/models/coco_label.txt',
        "box_score" : 0.4,
        "box_nms_iou" : 0.45
    }

| Target | Model Type | Description |
| --- | --- | --- |
| Lanes | `LaneModelType.UFLD_TUSIMPLE` | Supports TuSimple data with ResNet18 backbone. |
| Lanes | `LaneModelType.UFLD_CULANE` | Supports CULane data with ResNet18 backbone. |
| Lanes | `LaneModelType.UFLDV2_TUSIMPLE` | Supports TuSimple data with ResNet18/34 backbone. |
| Lanes | `LaneModelType.UFLDV2_CULANE` | Supports CULane data with ResNet18/34 backbone. |
| Object | `ObjectModelType.YOLOV5` | Supports yolov5n/s/m/l/x models. |
| Object | `ObjectModelType.YOLOV5_LITE` | Supports yolov5lite-e/s/c/g models. |
| Object | `ObjectModelType.YOLOV6` | Supports yolov6n/s/m/l and yolov6lite-s/m/l models. |
| Object | `ObjectModelType.YOLOV7` | Supports yolov7 tiny/x/w/e/d models. |
| Object | `ObjectModelType.YOLOV8` | Supports yolov8n/s/m/l/x models. |
| Object | `ObjectModelType.YOLOV9` | Supports yolov9s/m/c/e models. |
| Object | `ObjectModelType.EfficientDet` | Supports efficientDet b0/b1/b2/b3 models. |

- Run for POV_1:

`python demo.py`

- Run for POV_2: (change the directory to Drowsiness detector)

`python detect.py`

- Setting Config :

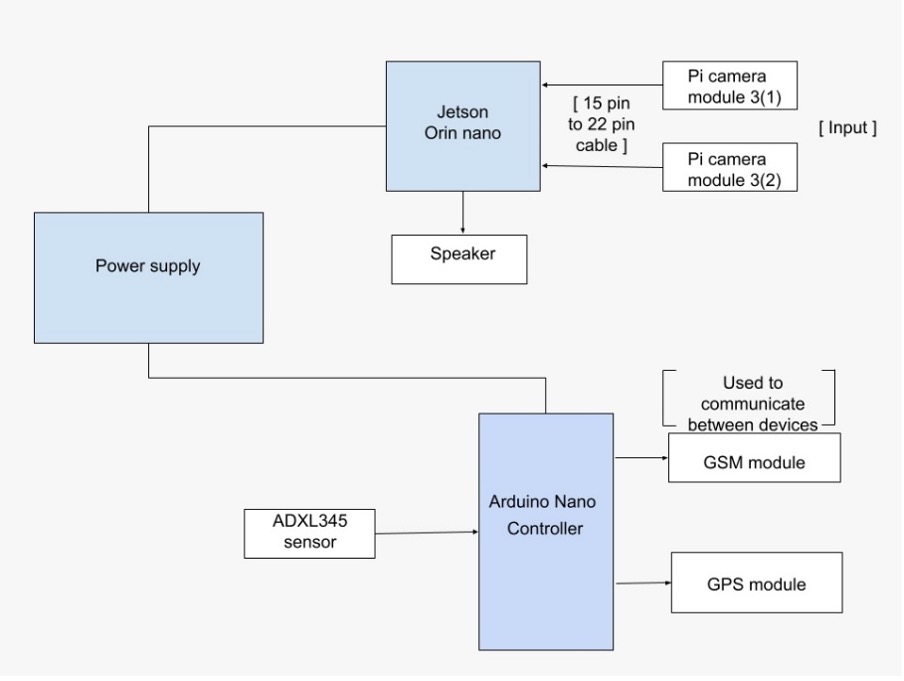
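The `box_score` and `box_nms_iou` values in `object_config` are the kind of thresholds typically used for score filtering and non-maximum suppression (NMS). The sketch below shows how such thresholds usually act on raw detections. It is a simplified, class-agnostic illustration with made-up boxes, and the function names are hypothetical rather than taken from this repository.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes, scores, box_score=0.4, box_nms_iou=0.45):
    """Return indices of boxes that survive score filtering and NMS."""
    # Step 1: drop low-confidence boxes, then visit the rest best-first.
    candidates = sorted(
        (i for i, s in enumerate(scores) if s >= box_score),
        key=lambda i: scores[i],
        reverse=True,
    )
    # Step 2: keep a box only if it does not overlap a better box too much.
    kept = []
    for i in candidates:
        if all(iou(boxes[i], boxes[k]) <= box_nms_iou for k in kept):
            kept.append(i)
    return kept


# Made-up detections: two overlapping boxes, one separate box, one weak box.
boxes = [(10, 10, 110, 110), (20, 20, 120, 120), (200, 200, 260, 260), (300, 300, 340, 340)]
scores = [0.90, 0.80, 0.75, 0.30]
kept = filter_detections(boxes, scores)
print(kept)
```

Raising `box_score` removes more weak detections, while lowering `box_nms_iou` merges overlapping boxes more aggressively.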

Hardware setup :

Hardware requirements :

1. Jetson Orin Nano

2. Servo motor

3. Pi Camera Module 3

4. Power bank (10000 to 20000 mAh)

5. Arduino Nano

6. ADXL345 accelerometer

7. GPS NEO-6M

8. GSM SIM800L

9. LM2596 step-down converter

10. Zero PCB

11. 12 V 2 A power supply

12. 15-pin to 22-pin cable

Hardware Integration :

Results :

POV_1 : Monitoring the external environment

- Front Collision Warning System :

- Lane Departure Warning System :

- Lane Keeping Assist System :
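The lane departure warning and lane keeping assist stages can be pictured as two thresholds on the vehicle's offset from the lane center. The sketch below is a minimal illustration under several assumptions: the camera sits on the vehicle centerline, the lane detector provides the x-positions of the left and right lane lines near the bottom of the frame, and the thresholds and gain are hypothetical values.

```python
def lateral_offset(left_x, right_x, frame_width):
    """Signed offset of the vehicle from the lane center, in lane widths.

    left_x / right_x: pixel x-positions of the detected lane lines near the
    bottom of the frame. A positive result means the vehicle is right of center.
    """
    lane_width = right_x - left_x
    if lane_width <= 0:
        raise ValueError("right lane line must be right of the left one")
    lane_center = (left_x + right_x) / 2.0
    vehicle_center = frame_width / 2.0  # assumption: camera on the centerline
    return (vehicle_center - lane_center) / lane_width


def lane_status(offset, signaling=False, warn_at=0.25, assist_at=0.40):
    """Return 'ok', 'warn' (departure warning) or 'assist' (steering help)."""
    if signaling:
        return "ok"  # an indicated lane change is treated as intentional
    if abs(offset) >= assist_at:
        return "assist"
    if abs(offset) >= warn_at:
        return "warn"
    return "ok"


def steering_correction(offset, gain=0.5):
    """Proportional correction that steers back towards the lane center."""
    return -gain * offset


# Three made-up frames: centered, drifting, and drifting further.
for left_x, right_x in [(340, 940), (180, 780), (60, 660)]:
    off = lateral_offset(left_x, right_x, frame_width=1280)
    print(f"offset={off:+.2f} status={lane_status(off)}")
```

Keeping the warning threshold below the assist threshold reproduces the described behaviour: the driver is alerted first, and steering is adjusted only if the drift continues.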

POV_2 : Monitoring the internal environment

[ EAR -> Eye aspect ratio ]

- Active driver :

- Drowsy driver :
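The eye aspect ratio (EAR) is commonly computed from six eye landmarks as the sum of the two vertical eye openings divided by twice the horizontal eye width. The sketch below follows that standard definition. The landmark coordinates, the 0.25 threshold and the frame count are assumptions for illustration and may differ from the values used in `detect.py`.

```python
import math


def _distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def eye_aspect_ratio(eye):
    """EAR for six landmarks p1..p6.

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    p5 and p6 on the lower lid (p2 above p6, p3 above p5).
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = _distance(p2, p6) + _distance(p3, p5)
    horizontal = _distance(p1, p4)
    return vertical / (2.0 * horizontal)


def is_drowsy(ear_values, threshold=0.25, min_frames=3):
    """True if EAR stays below the threshold for min_frames consecutive frames."""
    run = 0
    for ear in ear_values:
        run = run + 1 if ear < threshold else 0
        if run >= min_frames:
            return True
    return False


# Made-up landmarks for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (3, 1), (4, 0), (3, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.2), (3, 0.2), (4, 0), (3, -0.2), (1, -0.2)]

print(eye_aspect_ratio(open_eye), eye_aspect_ratio(closed_eye))
print(is_drowsy([0.30, 0.10, 0.12, 0.11, 0.31]))
```

Requiring several consecutive low-EAR frames keeps ordinary blinks from triggering the drowsiness alert.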

This repository contains the project submission by Madadapu HemanthSai and Team, B.Tech CSE - AIML students at MLR Institute of Technology. The project encompasses solutions to problems that demonstrate proficiency in machine learning and computer vision.

Submitted By

- Name: Team - 10

- Program: B.Tech CSE - AIML

- Institution: MLR Institute of Technology

- Contact:

- E-Mail: hemanthsai826@gmail.com

- Website: hemanth1403

Contributing

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.